Cybersecurity in Smart Cities

Protecting the Digital Foundations of Urban Life

Urban life is changing at a pace never seen before. Cities are no longer just concrete structures and transportation systems. They are becoming highly connected ecosystems powered by data and digital technology. Smart cities promise improved efficiency, sustainability, and convenience through connected infrastructure, intelligent traffic systems, and digital public services. But with this transformation comes a critical challenge: cybersecurity.

What Makes a City Smart

A smart city integrates technology into its core functions. Sensors monitor traffic flow, smart grids optimize energy distribution, and connected services manage everything from water supply to waste disposal. Data collected from these systems enables real-time decision-making, improving quality of life and reducing environmental impact.

However, this connectivity creates an extensive digital footprint. Every device, from streetlights to surveillance cameras, is connected to a network. While this offers tremendous benefits, it also creates potential entry points for cyberattacks.

The Growing Threat Landscape

Cybersecurity threats in smart cities are not hypothetical. They are a growing reality. Attacks can range from disabling traffic lights to disrupting power grids. The consequences are not limited to inconvenience. A well-coordinated cyberattack could paralyze emergency response systems or compromise critical infrastructure, putting lives at risk.

The complexity of smart city networks adds to the challenge. Unlike traditional IT environments, these systems involve a mix of hardware, software, and operational technologies. Many devices have limited processing power, making it difficult to implement advanced security features. As a result, vulnerabilities can remain undetected until exploited.

Key Areas of Concern

Critical Infrastructure

Power grids, water systems, and public transportation networks are central to city operations. Cyberattacks on these systems can cause widespread disruption and economic damage. Securing them requires layered defense strategies, continuous monitoring, and rapid response capabilities.

IoT Devices

The Internet of Things is the backbone of smart cities. Sensors, cameras, and connected meters collect and transmit data constantly. Unfortunately, many IoT devices lack strong security protocols, making them susceptible to hacking. A single compromised device can serve as a gateway to an entire network.

Data Privacy

Smart cities generate massive amounts of data, much of it related to personal behavior and movement. Protecting this information is essential to maintaining public trust. Strong encryption, anonymization techniques, and strict access controls are necessary to safeguard citizen privacy.
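One widely used privacy technique is pseudonymization. Direct identifiers such as a transit card number are replaced with keyed hashes, so analysts can still count repeat trips without seeing who took them. The sketch below is illustrative only. It assumes a hypothetical transit record with `card_id`, `station`, and `timestamp` fields, uses Python's standard `hmac` module, and simplifies key handling. Pseudonymized data is not fully anonymous, because anyone holding the key can re-link it. It therefore complements encryption and access controls rather than replacing them.

```python
import hashlib
import hmac
import secrets

# Hypothetical key; in practice it would be held in a key-management system,
# never stored alongside the data it protects.
DEFAULT_KEY = secrets.token_bytes(32)


def pseudonymize(identifier: str, key: bytes = DEFAULT_KEY) -> str:
    """Replace a direct identifier with an HMAC-SHA256 digest (64 hex characters)."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def strip_record(record: dict, key: bytes = DEFAULT_KEY) -> dict:
    """Pseudonymize the (hypothetical) card ID and coarsen the timestamp to the hour."""
    return {
        "rider": pseudonymize(record["card_id"], key),
        "station": record["station"],
        "hour": record["timestamp"][:13],  # keeps "YYYY-MM-DDTHH", drops minutes and seconds
    }


if __name__ == "__main__":
    trip = {"card_id": "CARD-0042", "station": "Central", "timestamp": "2024-05-01T08:17:33"}
    print(strip_record(trip))
```

The same card always maps to the same pseudonym under a given key. Trips can therefore still be linked for planning purposes while the raw card number never leaves the ingestion step.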

Building Resilient Smart Cities

The foundation of cybersecurity in smart cities lies in proactive planning. Security cannot be an afterthought. It must be integrated into every layer of design and deployment. This includes using secure communication protocols, conducting regular vulnerability assessments, and implementing a zero-trust architecture, which assumes no device or user is inherently trusted.
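Here is a minimal sketch of what zero trust means for a single request. It assumes a hypothetical policy table and credential and device-health checks that have already been computed. Every request is evaluated on identity, device posture, and least-privilege permissions. The network the request came from earns no implicit trust.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    user: str
    resource: str
    action: str
    credential_valid: bool  # outcome of verifying the caller's token or certificate
    device_healthy: bool    # outcome of a device-posture check (patched, not tampered)
    source_network: str     # "internal" or "internet"; deliberately ignored below


# Hypothetical least-privilege policy: (user, resource, action) triples that are allowed.
POLICY = {
    ("traffic-operator", "signal-controller", "read"),
    ("traffic-operator", "signal-controller", "write"),
    ("analyst", "traffic-dashboard", "read"),
}


def authorize(req: Request) -> bool:
    """Evaluate every request on its own merits; being on the internal network grants nothing."""
    if not req.credential_valid:
        return False
    if not req.device_healthy:
        return False
    return (req.user, req.resource, req.action) in POLICY


if __name__ == "__main__":
    # An analyst inside the city network still cannot change traffic signals.
    print(authorize(Request("analyst", "signal-controller", "write", True, True, "internal")))
```

In a traditional perimeter model, `source_network == "internal"` would often be enough to let a request through. Here it plays no part in the decision.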

Artificial intelligence and machine learning can play a vital role in strengthening defenses. These technologies can detect unusual patterns in network traffic and identify threats before they cause harm. Automated response systems can isolate compromised components, reducing the impact of attacks.
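Production systems use far richer models than this. The underlying idea of flagging traffic that departs from a learned baseline can still be illustrated with a simple statistical rule. The sketch assumes per-device packet rates and a hypothetical threshold of three standard deviations. The flagged devices are the ones an automated response might move to an isolated network segment.

```python
import statistics


def flag_devices(baseline: list[float], current: dict[str, float], threshold: float = 3.0) -> set[str]:
    """Return the devices whose current rate lies more than `threshold` standard
    deviations from the baseline mean (a simple z-score rule)."""
    mean = statistics.mean(baseline)
    stdev = statistics.stdev(baseline)  # sample standard deviation; needs at least two values
    return {device for device, rate in current.items() if abs(rate - mean) > threshold * stdev}


if __name__ == "__main__":
    # Hypothetical packets-per-second readings from normal operation.
    baseline = [120, 118, 125, 130, 122, 119, 127, 121]
    current = {"cam-03": 123, "cam-11": 126, "cam-17": 980, "meter-2": 119}
    print(flag_devices(baseline, current))  # candidates for automated isolation
```

A fixed z-score threshold is easy to explain and audit. Machine learning models earn their place when "normal" varies by time of day, device type, and traffic mix in ways a single mean cannot capture.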

Public awareness and collaboration are also essential. Citizens need to understand the importance of cybersecurity in their daily interactions with smart services. Governments, technology providers, and cybersecurity experts must work together to create standards, share intelligence, and ensure compliance with best practices.

The Role of Policy and Regulation

Governments have a critical role in shaping the cybersecurity framework for smart cities. Clear regulations and enforcement mechanisms are needed to hold vendors and operators accountable for security practices. International cooperation is equally important since cyber threats do not respect borders. Global standards for IoT security, data protection, and network resilience will help reduce vulnerabilities across interconnected systems.

A Vision for the Future

Smart cities represent the future of urban living. They promise cleaner environments, efficient transportation, and improved public services. However, without robust cybersecurity measures, these benefits could come at a cost far greater than anticipated. The goal is not to slow down innovation but to ensure that progress is built on a secure foundation.

At BitPulse, we believe that technology should empower society, not expose it to new risks. As cities become smarter, cybersecurity must become stronger. The choices made today will define not only the safety of digital infrastructure but also the trust of the people who depend on it.

The Future of Energy Storage

Powering a Sustainable World

The global transition toward renewable energy is well underway, driven by a need to reduce carbon emissions and combat climate change. Solar panels and wind turbines have become common sights, but their success depends on more than generating clean electricity. The true challenge lies in storing that energy efficiently. Without reliable storage, renewable power cannot deliver the stability modern societies require. This is why energy storage technology is emerging as one of the most critical components of the future energy landscape.

Why Energy Storage Matters

Unlike traditional power plants that produce electricity on demand, renewable sources such as wind and solar are intermittent. The sun does not always shine, and the wind does not always blow. Without storage, excess energy generated during peak conditions is wasted, and shortages occur when production is low. Energy storage systems bridge this gap by storing surplus electricity for later use, ensuring a steady and dependable power supply.
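The balancing act can be sketched as an hour-by-hour simulation. It assumes hypothetical solar output and a flat demand profile, both in kWh. Surplus charges the battery until it is full, and anything beyond that is curtailed, meaning wasted. Deficits draw on stored energy before counting as unmet demand. For simplicity, storage losses are applied at charging time.

```python
def simulate_storage(generation, demand, capacity_kwh, efficiency=0.9):
    """Simulate a battery hour by hour.

    Surplus generation charges the battery until it is full; losses are applied
    at charging time for simplicity. Deficits are served from storage first.
    Returns (unmet_demand_kwh, curtailed_kwh).
    """
    stored = 0.0
    unmet = 0.0
    curtailed = 0.0
    for gen, load in zip(generation, demand):
        if gen >= load:
            surplus = gen - load
            charge = min(surplus * efficiency, capacity_kwh - stored)  # energy actually banked
            stored += charge
            curtailed += surplus - charge / efficiency  # surplus that could not be used
        else:
            deficit = load - gen
            discharge = min(deficit, stored)
            stored -= discharge
            unmet += deficit - discharge
    return unmet, curtailed


if __name__ == "__main__":
    solar = [0, 0, 5, 8, 8, 5, 0, 0]  # hypothetical kWh per hour
    load = [3] * 8
    print(simulate_storage(solar, load, capacity_kwh=0))
    print(simulate_storage(solar, load, capacity_kwh=10, efficiency=1.0))
```

With this sample day and no battery, 12 kWh of demand goes unmet and 14 kWh of solar is curtailed. A 10 kWh battery at 100% efficiency cuts those figures to 6 kWh and 4 kWh.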

This capability is essential not only for homes and businesses but also for the stability of entire electrical grids. As renewable energy penetration increases, grid operators must manage fluctuations in supply and demand with greater precision. Energy storage provides the flexibility needed to keep the lights on in a world powered by clean energy.

Current Technologies in Use

Several technologies dominate the energy storage landscape today, each with its strengths and limitations:

Lithium-Ion Batteries

Lithium-ion technology is the most widely adopted solution, powering everything from smartphones to electric vehicles and large-scale energy storage projects. These batteries offer high energy density, fast response times, and declining costs due to advancements in manufacturing. However, concerns remain about resource availability, recycling, and environmental impact.

Pumped Hydro Storage

Pumped hydro storage has been around for decades and remains the largest form of energy storage by capacity. It works by pumping water to an elevated reservoir during periods of low demand and releasing it through turbines when electricity is needed. While effective, this method requires suitable terrain with a large height difference between reservoirs, along with significant land and water resources, making it less feasible in certain regions.
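The energy a pumped hydro plant can store follows directly from gravitational potential energy. The relevant quantities are water density ρ, gravitational acceleration g, the height difference (head) h, and the stored volume V. As a worked example, assume a hypothetical upper reservoir holding 1,000,000 m³ of water, 300 m above the lower one. Assume also an overall efficiency η of 0.8 for converting that potential energy back into electricity:

```latex
\begin{aligned}
E &= \eta\,\rho\,g\,h\,V \\
  &= 0.8 \times 1000\,\tfrac{\text{kg}}{\text{m}^3} \times 9.81\,\tfrac{\text{m}}{\text{s}^2} \times 300\,\text{m} \times 10^{6}\,\text{m}^3 \\
  &\approx 2.35 \times 10^{12}\,\text{J} \approx 654\,\text{MWh}
\end{aligned}
```

Stored energy scales linearly with both head and volume. Doubling the height difference stores as much extra energy as doubling the reservoir.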

Flow Batteries

Flow batteries store energy in liquid electrolytes housed in external tanks. Their ability to decouple power and energy capacity makes them ideal for long-duration storage. Although still in the early stages of commercialization, flow batteries could play a key role in balancing renewable energy grids.

Thermal Storage

Thermal storage systems capture energy in the form of heat, which can later be converted into electricity or used directly for heating. This approach is particularly useful for industrial applications and concentrated solar power plants.

The Role of Emerging Innovations

While current technologies form the backbone of energy storage, innovation is accelerating. Researchers are exploring solid-state batteries that promise greater safety, higher energy density, and faster charging times. Sodium-ion batteries, which use more abundant materials than lithium, could offer a cost-effective alternative for large-scale deployment.

Hydrogen storage is another promising area. Excess renewable energy can power electrolysis systems that split water into hydrogen and oxygen. The hydrogen can then be stored and later converted back into electricity using fuel cells, providing a versatile solution that also supports transportation and industrial applications.

Economic and Environmental Impact

The economics of energy storage are improving rapidly. Falling battery costs and technological advancements are making large-scale projects financially viable. This progress not only supports the integration of renewable energy but also creates opportunities for job growth and economic development.

Environmental considerations are equally important. Recycling and responsible sourcing of materials such as lithium and cobalt must become standard practice. Policymakers and industry leaders are increasingly focused on creating circular supply chains to minimize the ecological footprint of energy storage systems.

Looking Ahead

Energy storage is not simply a supporting technology for renewables. It is the key to unlocking a fully decarbonized energy system. As innovations mature and costs decline, storage will become a cornerstone of global energy infrastructure, enabling communities to enjoy clean, reliable power around the clock.

At BitPulse, we see energy storage as more than a technical challenge. It is a symbol of progress, representing humanity’s ability to adapt and innovate for a sustainable future. The technologies being developed today will shape the energy systems of tomorrow, influencing everything from transportation to climate policy. In the years ahead, energy storage will determine how quickly and effectively the world transitions to a low-carbon economy.

The Future of Robotics

Intelligent Machines Are Redefining Work and Society

Robotics has always captured the human imagination. From the earliest science fiction stories to industrial automation, the idea of machines performing complex tasks has fascinated innovators and everyday people alike. Today, robotics is no longer a futuristic dream. It is a reality that is transforming industries, reshaping economies, and influencing how humans interact with technology.

From Assembly Lines to Intelligent Systems

The first wave of robotics focused on automating repetitive tasks in manufacturing. These machines were rigid, programmed to perform a single function with precision. They revolutionized production by improving speed and reducing human error, but their capabilities were limited.

Modern robotics is different. Advances in artificial intelligence, machine learning, and sensor technologies have given rise to machines that can adapt, learn, and make decisions. Robots are no longer confined to factories. They operate in hospitals, warehouses, farms, and even homes. This shift represents a new era where robots collaborate with humans rather than simply replacing them.

Applications Across Industries

Healthcare

Robotic systems are making significant contributions to healthcare. Surgical robots allow doctors to perform complex procedures with enhanced precision and minimal invasiveness. Rehabilitation robots help patients recover mobility after injuries, while telepresence robots enable doctors to provide remote consultations in areas with limited access to medical professionals.

Logistics and Warehousing

The rise of e-commerce has fueled demand for faster, more efficient supply chains. Robots equipped with computer vision and AI algorithms now manage inventory, pick and pack products, and navigate warehouse environments autonomously. These innovations reduce operational costs and improve delivery times, setting new standards for the industry.

Agriculture

Agricultural robots are addressing global food challenges by automating planting, irrigation, and harvesting. Equipped with sensors and AI, these machines monitor soil conditions, detect pests, and optimize crop yields, making farming more sustainable and less labor-intensive.

Domestic Assistance

Home robots are becoming increasingly popular for cleaning, security, and companionship. While still evolving, these devices demonstrate how robotics can enhance convenience and improve quality of life in everyday settings.

The Rise of Collaborative Robots

One of the most important trends in robotics is the development of collaborative robots, or cobots. Unlike traditional industrial robots that work in isolation, cobots are designed to operate alongside humans. They assist with tasks that require flexibility and problem-solving, allowing workers to focus on higher-value activities. This collaboration is redefining workplace dynamics, blending human creativity with machine precision.

Challenges in Robotics Adoption

Despite its potential, the widespread adoption of robotics faces several hurdles. Cost remains a major factor, particularly for small and medium-sized businesses. Advanced robots require significant investment in hardware, software, and integration.

Another challenge is workforce displacement. While robots create opportunities for new roles in programming, maintenance, and system design, they also replace jobs in traditional labor markets. This shift underscores the need for reskilling and education to prepare workers for a future where human-machine collaboration is the norm.

Ethics and safety are also key considerations. Ensuring that robots operate reliably in environments shared with humans requires strict standards and regulatory oversight. Additionally, as robots become more autonomous, questions about accountability and decision-making become increasingly complex.

What Comes Next

Looking ahead, robotics will become even more interconnected with other technologies. Integration with artificial intelligence will create systems that not only execute tasks but also predict needs and optimize performance. Advances in 5G and edge computing will allow robots to operate in real time with minimal latency, expanding their capabilities in fields such as autonomous vehicles and remote surgery.

The long-term vision includes robots that can learn through experience, adapt to new environments, and interact with humans in natural and intuitive ways. These machines will not just work for us but with us, creating opportunities that were previously unimaginable.

At BitPulse, we see robotics as one of the most transformative forces of the coming decades. Its impact will extend beyond economics, influencing culture, ethics, and even human identity. The question is not whether robots will become part of our lives, but how we will shape their role to ensure that technology serves humanity in meaningful and responsible ways.

The Expanding Role of Blockchain Beyond Cryptocurrency

When blockchain technology first entered the public consciousness, it was synonymous with Bitcoin and digital currencies. Many dismissed it as a niche solution for an emerging financial trend. Today, that perception has changed dramatically. Blockchain is no longer confined to cryptocurrency. It is evolving into a powerful tool for transparency, security, and trust across a range of industries.

Understanding the Core of Blockchain

At its simplest, blockchain is a decentralized ledger system that records transactions across multiple computers in a way that is secure, transparent, and nearly impossible to alter. Unlike traditional databases controlled by a single authority, blockchain operates on a peer-to-peer network. Transactions are validated by the network through a consensus mechanism and grouped into blocks. Each block stores a cryptographic hash of the block before it, linking the blocks into a chain that forms an effectively immutable history.

This structure provides two major benefits. First, it protects integrity. Because each block contains the hash of its predecessor, tampering with one block would require recalculating every block that follows it and persuading the rest of the network to accept the altered copy. Second, it removes the need for intermediaries, reducing costs and increasing efficiency in systems that traditionally rely on central authorities.
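The tamper-evidence described above can be shown with a toy hash chain. This sketch uses SHA-256 from Python's standard `hashlib`. It deliberately omits everything else a real blockchain needs, including consensus, digital signatures, and peer-to-peer networking. The `Block` structure and its field names are illustrative assumptions.

```python
import hashlib
import json
from dataclasses import dataclass

GENESIS_PREV_HASH = "0" * 64  # placeholder "previous hash" for the first block


@dataclass
class Block:
    index: int
    data: str
    prev_hash: str
    block_hash: str = ""

    def compute_hash(self) -> str:
        """SHA-256 over the block's contents, including the previous block's hash."""
        payload = json.dumps(
            {"index": self.index, "data": self.data, "prev_hash": self.prev_hash},
            sort_keys=True,
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(records: list[str]) -> list[Block]:
    chain: list[Block] = []
    prev_hash = GENESIS_PREV_HASH
    for i, data in enumerate(records):
        block = Block(i, data, prev_hash)
        block.block_hash = block.compute_hash()
        chain.append(block)
        prev_hash = block.block_hash
    return chain


def is_valid(chain: list[Block]) -> bool:
    for i, block in enumerate(chain):
        if block.block_hash != block.compute_hash():
            return False  # contents changed after the hash was recorded
        if i > 0 and block.prev_hash != chain[i - 1].block_hash:
            return False  # link to the previous block is broken
    return True


if __name__ == "__main__":
    chain = build_chain(["A pays B 5", "B pays C 2", "C pays A 1"])
    print(is_valid(chain))
    chain[0].data = "A pays B 500"  # tamper with history
    print(is_valid(chain))
```

Editing the first block's data invalidates its stored hash. Recomputing that hash then breaks the `prev_hash` link in the second block, so an attacker would have to rewrite every later block as well.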

Blockchain in Supply Chain Management

One of the most promising applications of blockchain is in supply chain management. Companies across industries struggle with tracking the origin and movement of goods. Counterfeiting and lack of transparency cost billions each year. Blockchain can help address these problems by providing a secure, verifiable record of every step in the supply chain.

For example, in the food industry, blockchain enables retailers and consumers to trace products from farm to shelf. If a contamination issue occurs, companies can quickly identify the source and remove affected items, reducing waste and protecting public health.

Healthcare and Secure Data Sharing

Healthcare systems face the challenge of maintaining accurate and secure patient records. Data breaches not only compromise privacy but can also put lives at risk. Blockchain technology provides a way to store patient data in a tamper-resistant format while allowing authorized access when needed.

With blockchain, patients could have greater control over their medical information, granting temporary access to healthcare providers without relying on centralized databases that are vulnerable to hacking. This decentralized model can strengthen security and improve interoperability across healthcare systems.

Revolutionizing Digital Identity

Identity theft remains one of the most common forms of cybercrime. Current identity systems are fragmented, requiring users to maintain multiple accounts and credentials. Blockchain-based digital identity systems offer a solution by giving individuals control over their identity data. Users could share only the information necessary for a specific transaction, reducing exposure and protecting privacy.

This concept of self-sovereign identity could transform how we interact online, making verification faster, more secure, and less intrusive.

Smart Contracts and Automation

Smart contracts are another groundbreaking feature of blockchain. These self-executing agreements run on code and automatically enforce terms when predefined conditions are met. This can reduce the need for intermediaries such as lawyers or brokers, lowering costs and speeding up transactions.

Industries such as real estate, insurance, and finance are already exploring smart contracts to streamline processes. For instance, in real estate, a smart contract could handle everything from verifying property ownership to transferring funds, completing the sale in minutes instead of weeks.
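The conditional logic of the real estate example can be sketched as a small state machine. This is a hypothetical Python illustration, not how production smart contracts are built. On platforms such as Ethereum, contracts are written in languages like Solidity and executed by every node in the network. In this sketch, funds are released and ownership transferred only once both conditions are met: full payment and confirmed title.

```python
from enum import Enum, auto


class State(Enum):
    AWAITING_PAYMENT = auto()
    AWAITING_TITLE_CHECK = auto()
    COMPLETE = auto()


class PropertyEscrow:
    """Hypothetical escrow contract: the seller is paid only after the buyer has
    deposited the full price AND the seller's title has been confirmed."""

    def __init__(self, seller: str, buyer: str, price: int) -> None:
        self.seller = seller
        self.buyer = buyer
        self.price = price
        self.deposited = 0
        self.owner = seller
        self.seller_payout = 0
        self.state = State.AWAITING_PAYMENT

    def deposit(self, sender: str, amount: int) -> None:
        if self.state is not State.AWAITING_PAYMENT or sender != self.buyer:
            raise PermissionError("deposit not accepted")
        if self.deposited + amount > self.price:
            raise ValueError("deposit would exceed the agreed price")
        self.deposited += amount
        if self.deposited == self.price:
            self.state = State.AWAITING_TITLE_CHECK  # condition 1 met: full payment held

    def confirm_title(self, registry_owner: str) -> None:
        """registry_owner stands in for a lookup in a hypothetical land registry."""
        if self.state is not State.AWAITING_TITLE_CHECK:
            raise PermissionError("full payment has not been received")
        if registry_owner != self.seller:
            raise ValueError("seller does not hold title")
        # Both conditions met: transfer ownership and release funds together.
        self.owner = self.buyer
        self.seller_payout = self.deposited
        self.state = State.COMPLETE


if __name__ == "__main__":
    sale = PropertyEscrow("alice", "bob", 300_000)
    sale.deposit("bob", 300_000)
    sale.confirm_title("alice")
    print(sale.owner, sale.seller_payout, sale.state)
```

Calling `confirm_title` before the full deposit arrives, or with a registry owner other than the seller, raises an error instead of moving money. No party can skip a step.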

Challenges and Future Outlook

Despite its potential, blockchain is not without challenges. Scalability remains a significant issue, as most blockchain networks struggle to handle high transaction volumes efficiently. Energy consumption is another concern, particularly for blockchains that rely on proof-of-work consensus mechanisms. Less energy-intensive alternatives such as proof-of-stake are gaining traction, and Ethereum switched from proof-of-work to proof-of-stake in 2022, but many networks still rely on proof-of-work.

Regulation is another area of uncertainty. Governments worldwide are grappling with how to classify and govern blockchain-based systems without stifling innovation. Striking the right balance will determine how quickly the technology matures.

A Technology for the Next Decade

Blockchain is more than a technological trend. It represents a fundamental shift in how we manage data, trust, and security. While the early years were dominated by cryptocurrency, the next decade will see blockchain powering solutions in finance, healthcare, logistics, and identity management. Its ability to create transparency and eliminate intermediaries positions it as a cornerstone of the digital future.

At BitPulse, we see blockchain as part of a broader movement toward decentralization and user empowerment. Its success will depend on addressing technical challenges and establishing ethical and regulatory frameworks. One thing is clear: the impact of blockchain will extend far beyond digital currency. It is reshaping the foundation of the connected world.

The Rise of Biometric Authentication

The Future of Digital Security

The way we secure our digital lives is undergoing a profound transformation. Passwords, once the cornerstone of online security, are quickly becoming outdated in a world where cyberattacks are increasingly sophisticated. In their place, biometric authentication is emerging as a powerful alternative, promising both convenience and security. From fingerprint scans to facial recognition, this technology is reshaping how we protect our data and verify our identities.

What Is Biometric Authentication?

Biometric authentication uses unique physical or behavioral traits to verify identity. Unlike passwords or PINs, which can be forgotten or stolen, biometric identifiers such as fingerprints, facial features, iris patterns, and even voice characteristics are inherently tied to the individual. This makes them much harder, though not impossible, to replicate or misuse.

Modern biometric systems rely on advanced algorithms and machine learning to process and compare these traits. When a user presents a biometric sample, such as a fingerprint, the system converts it into a digital template and compares it with the template stored at enrollment. Two scans of the same finger or face are never identical, so the system computes a similarity score. Access is granted only if that score exceeds a preset threshold.
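The threshold comparison can be sketched with cosine similarity between feature vectors. The sketch assumes templates have already been extracted as short numeric vectors. Real systems derive much longer ones, for example from fingerprint minutiae or neural-network embeddings. The vectors and the 0.95 threshold here are hypothetical.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two feature vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def verify(sample: list[float], enrolled: list[float], threshold: float = 0.95) -> bool:
    """Accept when the fresh sample is close enough to the enrolled template;
    an exact match is never expected."""
    return cosine_similarity(sample, enrolled) >= threshold


if __name__ == "__main__":
    enrolled = [0.9, 0.1, 0.4, 0.7]      # hypothetical template stored at enrollment
    same_user = [0.88, 0.12, 0.41, 0.69]  # slightly different scan of the same person
    impostor = [0.2, 0.8, 0.5, 0.1]
    print(verify(same_user, enrolled), verify(impostor, enrolled))
```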

Why Biometrics Are Becoming Essential

The limitations of traditional authentication methods are well known. Passwords are vulnerable to phishing attacks, brute-force attempts, and human error. Many users reuse passwords across multiple platforms, increasing the risk of widespread breaches. Biometric authentication addresses these weaknesses by introducing factors that cannot be easily guessed or shared.

The demand for stronger security is not the only driving force. Consumers increasingly expect convenience. Unlocking a phone with a fingerprint or making a payment using facial recognition saves time and eliminates the need to remember complex credentials. This balance of security and ease of use explains why biometrics are being adopted at such a rapid pace.

Applications Across Industries

Biometric authentication is no longer limited to unlocking smartphones. Financial institutions are deploying voice recognition for customer verification during phone banking. Airports are implementing facial recognition systems to speed up check-ins and boarding while maintaining security. Healthcare organizations are using biometrics to ensure that patient records remain confidential and accurate.

Even workplaces are adopting biometric solutions for access control. Employees can enter secure areas with a fingerprint scan instead of carrying physical badges, reducing the risk of lost or stolen credentials. Retailers are exploring biometric payment systems that allow customers to complete transactions with a glance or a touch, creating a seamless shopping experience.

Challenges and Concerns

Despite its advantages, biometric authentication is not without challenges. Privacy is a major concern. Unlike passwords, biometric data cannot be changed if compromised. A stolen fingerprint or facial template could pose a permanent risk if not properly protected. This raises questions about how organizations store and secure biometric information.

Regulation and compliance are critical in addressing these concerns. Laws like the General Data Protection Regulation (GDPR) in Europe and similar frameworks elsewhere impose strict requirements for collecting and handling biometric data. Organizations must ensure that consent is obtained and that systems are designed to minimize the risk of data breaches.

Another challenge lies in accuracy. While modern biometric systems are highly reliable, false positives and false negatives can occur. Factors such as poor lighting, skin conditions, or changes in appearance can affect performance, requiring systems to incorporate fallback authentication methods.
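In biometrics these errors are usually measured as two rates. The false accept rate (FAR) is the share of impostor attempts that are wrongly accepted. The false reject rate (FRR) is the share of genuine attempts that are wrongly rejected. The sketch below computes both from hypothetical match scores. It shows the trade-off that makes fallback methods necessary: raising the threshold lowers FAR but raises FRR.

```python
def error_rates(genuine: list[float], impostor: list[float], threshold: float) -> tuple[float, float]:
    """Return (FAR, FRR) for a given acceptance threshold.

    FAR: fraction of impostor scores at or above the threshold (wrongly accepted).
    FRR: fraction of genuine scores below the threshold (wrongly rejected).
    """
    far = sum(score >= threshold for score in impostor) / len(impostor)
    frr = sum(score < threshold for score in genuine) / len(genuine)
    return far, frr


if __name__ == "__main__":
    genuine = [0.97, 0.99, 0.93, 0.96, 0.88]   # hypothetical same-person scores
    impostor = [0.45, 0.60, 0.91, 0.30, 0.52]  # hypothetical different-person scores
    for t in (0.95, 0.90):
        print(t, error_rates(genuine, impostor, t))
```

With these sample scores, a threshold of 0.95 rejects no impostors wrongly but turns away 40% of genuine attempts. Lowering it to 0.90 lets one impostor in while rejecting only one genuine user.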

The Future of Digital Identity

Looking ahead, biometric authentication is likely to become even more integrated into everyday life. Advances in artificial intelligence will improve accuracy and speed, while emerging technologies like multimodal biometrics, which combine multiple identifiers such as fingerprint and voice, will offer enhanced security.

There is also growing interest in decentralized identity systems, where users control their biometric data instead of storing it on centralized servers. This approach could mitigate the risks associated with large-scale data breaches, providing a more secure and user-friendly model for digital identity.

At BitPulse, we believe biometric authentication is not just a trend but a fundamental shift in how we approach security. As the digital world expands, protecting identity becomes one of the greatest challenges of our time. Biometrics offer a path forward, but they also demand careful planning, ethical considerations, and robust safeguards. The future of security will be defined not by what we remember, but by who we are.

The Future of 6G

Building a Hyperconnected World

Every technological leap builds on the foundation of its predecessor. Just as 4G brought mobile broadband to the masses and 5G opened the door to ultra-low latency and massive IoT connectivity, 6G is shaping up to be the next transformative step in global communication. While still in its early stages, the vision for 6G goes far beyond faster downloads. It represents an entirely new framework for connectivity that will enable applications we are only beginning to imagine.

What Is 6G and How Is It Different?

6G refers to the sixth generation of wireless technology, expected to launch commercially around 2030. While 5G offers speeds in the range of gigabits per second, 6G aims to deliver data rates up to 100 times faster, potentially reaching terabit-per-second speeds. Speed is only part of the goal. The aim is a network capable of supporting real-time, immersive experiences on a global scale.

The latency improvements are equally significant. 5G was designed to bring latency down to about one millisecond, while 6G research targets latency at the microsecond level. This leap could turn previously impractical applications into reality, such as instant remote surgery, fully autonomous transportation systems, and real-time holographic communication.

Key Technologies Powering 6G

Several advanced technologies will form the backbone of 6G networks. Terahertz frequency bands will provide the bandwidth necessary for enormous data throughput. These frequencies allow for incredible speed but pose challenges in signal range and penetration, requiring innovative solutions for network architecture.

Artificial intelligence will play a central role in managing 6G networks. Intelligent systems will handle everything from traffic optimization to predictive maintenance, creating self-healing networks that adapt dynamically to user demand and environmental conditions.

Edge computing will also become more important than ever. By processing data closer to the source, 6G networks can achieve the ultra-low latency required for time-critical applications. This integration of edge and cloud resources will create a distributed computing ecosystem capable of supporting billions of interconnected devices simultaneously.
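A quick calculation shows why distance matters. Light in optical fiber travels at roughly 2 × 10^8 m/s, about two-thirds of its speed in a vacuum. Propagation delay over a distance d therefore sets a floor on round-trip latency. The example compares a hypothetical cloud data center 500 km away with an edge node 1 km away:

```latex
\begin{aligned}
t_{\text{rtt}} &= \frac{2d}{v}, \qquad v \approx 2 \times 10^{8}\,\text{m/s} \\
d = 500\,\text{km}: \quad t_{\text{rtt}} &= \frac{2 \times 5 \times 10^{5}\,\text{m}}{2 \times 10^{8}\,\text{m/s}} = 5 \times 10^{-3}\,\text{s} = 5\,\text{ms} \\
d = 1\,\text{km}: \quad t_{\text{rtt}} &= \frac{2 \times 10^{3}\,\text{m}}{2 \times 10^{8}\,\text{m/s}} = 10^{-5}\,\text{s} = 10\,\mu\text{s}
\end{aligned}
```

Even with zero processing time, a round trip to the distant data center takes thousands of microseconds. The nearby edge node stays within tens of microseconds.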

Applications That Will Define the 6G Era

The most compelling aspect of 6G is not its technical specifications but the experiences it will enable. Extended reality environments, which combine augmented, virtual, and mixed reality, will become mainstream, offering immersive interactions in education, entertainment, and professional collaboration.

Healthcare will see revolutionary advancements through telepresence and remote surgeries performed with robotic precision. Autonomous vehicles will benefit from near-instantaneous communication, allowing them to coordinate with one another and with smart infrastructure for safer, more efficient transportation systems.

Smart cities will evolve into truly intelligent environments, where data flows seamlessly between homes, businesses, transportation, and energy grids. These networks will not only respond to human needs but anticipate them, creating adaptive systems that improve quality of life while conserving resources.

Challenges on the Road to 6G

Despite its promise, the path to 6G is filled with challenges. Developing the hardware capable of operating at terahertz frequencies will require breakthroughs in materials science and chip design. Building the infrastructure for widespread deployment will involve enormous investment and global cooperation.

Energy efficiency is another concern. With the number of connected devices expected to reach trillions, creating sustainable networks that minimize energy consumption is essential. Regulatory frameworks will also need to evolve to manage spectrum allocation and ensure security in an increasingly interconnected world.

The Vision Ahead

6G is not just an upgrade to existing networks. It is a platform for the future of digital interaction, where physical and virtual realities converge, and connectivity becomes as fundamental as electricity. While the journey will take years and require collaboration across industries and governments, the potential rewards are immense.

At BitPulse, we see 6G as more than a technological advancement. It represents a shift in how humanity connects, communicates, and collaborates. The networks of tomorrow will not simply deliver information. They will create environments where innovation thrives and possibilities are limited only by imagination.

The Evolution of Artificial Intelligence in Everyday Life

Artificial Intelligence has long been portrayed as futuristic, something that belongs in science fiction or advanced research labs. Today, that vision has shifted to reality. AI is no longer a concept confined to experimental environments. It is integrated into the fabric of daily life, powering the tools and systems we interact with every day. From recommendation engines that shape what we watch to predictive analytics that guide healthcare, the reach of AI is profound and growing.

Understanding the Basics of AI

Artificial Intelligence refers to systems designed to simulate human intelligence. Unlike traditional software that follows hand-written rules, most modern AI systems learn from data and adapt over time. This learning capability allows AI to handle complex tasks such as natural language processing, image recognition, and autonomous decision-making.

Machine learning, a subset of AI, plays a critical role in this transformation. By analyzing vast amounts of data, machine learning algorithms identify patterns and make predictions with remarkable accuracy. Deep learning, which uses layered neural networks, has taken this a step further, enabling breakthroughs in voice recognition, language translation, and computer vision.
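Learning a rule from data, rather than writing it by hand, can be seen in its simplest form with ordinary least squares. In this sketch the relationship y = 2x + 1 is never coded into the program; the function recovers it from example pairs. The data are made up, and real machine learning models fit millions of parameters rather than two. The principle of choosing parameters that best fit observed examples is the same.

```python
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


if __name__ == "__main__":
    xs = [1, 2, 3, 4]
    ys = [3, 5, 7, 9]  # generated by y = 2x + 1, which the code never sees
    slope, intercept = fit_line(xs, ys)
    print(slope, intercept, slope * 5 + intercept)  # learned rule applied to a new input
```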

AI in Everyday Applications

AI is quietly embedded in the tools we use most often. Virtual assistants like Siri, Alexa, and Google Assistant interpret voice commands and manage everything from reminders to smart home devices. Streaming platforms leverage AI to analyze viewing habits, suggesting content tailored to personal preferences. These experiences feel seamless because AI operates in the background, continuously learning and optimizing for better engagement.

In retail, AI drives personalized shopping experiences by predicting customer behavior and recommending products. Financial institutions rely on AI to detect fraudulent transactions, ensuring security and trust. Even navigation apps use AI to calculate the fastest routes by analyzing real-time traffic data and historical trends.

Healthcare and AI: A Transformative Partnership

One of the most promising areas for AI is healthcare. From diagnostic imaging to drug discovery, AI systems are reducing the time and cost associated with critical medical processes. Algorithms can identify anomalies in medical scans with accuracy that, in some studies, rivals human specialists. This helps doctors diagnose conditions earlier, improving treatment outcomes.

AI also plays a vital role in telemedicine and patient monitoring. Wearable devices equipped with AI can track vital signs, alerting physicians when irregularities occur. These innovations are particularly valuable in rural or underserved regions where access to healthcare professionals is limited.

Ethical and Social Implications

While the benefits of AI are clear, its adoption raises ethical concerns that cannot be ignored. Privacy is a major issue. The data required to train AI models often includes sensitive personal information, making security paramount. There is also the question of bias. If the data fed into AI systems is skewed, the decisions generated by those systems can perpetuate or even amplify existing inequalities.

Transparency and accountability in AI decision-making are essential. As algorithms take on more critical roles, from loan approvals to job recruitment, understanding how these decisions are made becomes a matter of fairness and trust.

The Road Ahead for AI

The future of AI will likely involve greater integration with other emerging technologies. Combining AI with edge computing will enable faster decision-making for applications like autonomous vehicles and industrial automation. In the realm of creative work, generative AI models are already producing art, music, and even entire articles, blurring the line between human and machine creativity.

Regulation will play a key role in shaping this future. Governments and organizations must work together to create frameworks that encourage innovation while protecting individual rights and ensuring ethical standards.

At BitPulse, we view AI as more than a technological advancement. It is a shift in how humanity approaches problem-solving and creativity. The challenge is not just building smarter systems but ensuring that these systems serve society responsibly. AI is no longer about what machines can do, but about what they should do.

The Rising Power of Neural Interfaces

The Future of Human-Machine Interaction

Technology has always been about extending human capabilities. From the first tools carved from stone to the smartphones in our pockets, every innovation has aimed to make life easier, faster, and more connected. Today, one of the most ambitious frontiers in technology is the development of neural interfaces, systems that create a direct communication link between the human brain and external devices. This is not science fiction anymore. It is a rapidly advancing field with implications that could transform how we live, work, and experience the world.

What Are Neural Interfaces?

Neural interfaces, also known as brain-computer interfaces (BCIs), are technologies that enable direct interaction between the brain and machines without the need for traditional input methods like keyboards or touchscreens. These systems interpret electrical signals generated by neurons and translate them into commands that devices can understand.

The concept may sound futuristic, but the foundation is already here. Medical applications such as cochlear implants and deep brain stimulators have been used for years to restore hearing or manage conditions like Parkinson’s disease. Recent breakthroughs are pushing beyond medical use, opening possibilities for everyday interaction with digital systems.

How Do They Work?

At the core of neural interfaces are sensors that detect neural activity. These signals are then processed using advanced algorithms, often powered by machine learning, to decode patterns and translate them into actionable instructions. Depending on the system, the interface can be invasive, involving implanted electrodes, or non-invasive, relying on external devices like EEG headsets.
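The decoding step can be illustrated with one of the simplest classifiers, a nearest-centroid decoder. This is a minimal sketch, not the method of any particular BCI: it assumes each window of neural activity has already been reduced to a small feature vector (hypothetically, signal power in two frequency bands) and assigns a new window to whichever command's average calibration example it lies closest to. Command names and numbers are invented.

```python
import math

def train_centroids(examples):
    """Average the calibration feature vectors recorded for each command."""
    centroids = {}
    for command, vectors in examples.items():
        dims = len(vectors[0])
        centroids[command] = [sum(v[i] for v in vectors) / len(vectors) for i in range(dims)]
    return centroids

def decode(centroids, features):
    """Return the command whose centroid is nearest in Euclidean distance."""
    return min(centroids, key=lambda cmd: math.dist(centroids[cmd], features))

# Hypothetical calibration data: [band_power_a, band_power_b] per time window
calibration = {
    "move_left":  [[0.9, 0.2], [1.1, 0.3], [1.0, 0.25]],
    "move_right": [[0.2, 1.0], [0.3, 0.9], [0.25, 1.1]],
    "rest":       [[0.5, 0.5], [0.55, 0.45], [0.45, 0.55]],
}

centroids = train_centroids(calibration)
print(decode(centroids, [0.95, 0.3]))  # a new, unlabeled window
```

The calibration phase mirrors how users of many systems first repeat known intentions so the decoder can learn what their signals look like.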

The invasive approach offers higher accuracy and faster response times but comes with surgical risks. Non-invasive systems are safer but face challenges in signal clarity. Researchers are working to improve both methods to achieve seamless integration between mind and machine.

Potential Applications

The range of potential applications for neural interfaces is astonishing. In healthcare, BCIs could help restore mobility to patients with paralysis by enabling them to control prosthetic limbs with their thoughts. Communication systems for individuals with speech impairments could allow words to be formed directly from neural signals, bypassing the muscles normally needed for speech.

Beyond healthcare, the technology has implications for gaming, virtual reality, and even work productivity. Imagine being able to design complex structures, control robots, or navigate digital environments using only your thoughts. Neural interfaces could redefine human-computer interaction and eliminate the barriers of physical input devices.

Challenges and Ethical Considerations

As with any transformative technology, neural interfaces come with significant challenges. Technical limitations like signal accuracy, device size, and power consumption must be addressed. There are also questions about privacy and security. If thoughts can be decoded, how do we prevent unauthorized access? Who owns the data generated by the human brain?

Ethics play a major role in shaping this future. The idea of reading or influencing neural activity raises profound concerns about consent and autonomy. These issues must be carefully managed through regulations and transparent innovation practices.

The Road Ahead

The journey toward widespread adoption of neural interfaces will take time, but the progress is undeniable. Tech companies and research institutions are investing heavily in this area, and prototypes are already demonstrating real-world functionality. As artificial intelligence advances, its integration with BCIs could create systems that learn from and adapt to users in unprecedented ways.

The ultimate vision is a world where the boundary between human cognition and digital systems becomes almost invisible. Such a reality could revolutionize how we learn, work, and connect. It could also blur the line between biological and artificial intelligence, sparking new debates about what it means to be human in a hyper-connected era.

At BitPulse, we believe that neural interfaces represent not just a technological shift but a philosophical one. They invite us to imagine a future where our thoughts are no longer private actions but active tools for shaping reality. Whether that future excites or unsettles you, one thing is certain: it is coming faster than most of us expect.

The Next Frontier in Computing

How Quantum Technology is Changing the Digital Landscape

Technology is often defined by its limits. Every leap forward begins when those limits are challenged. Today, the boundary being tested is not speed or storage capacity but the very principles of computation itself. This is the era of quantum computing, a breakthrough that has the potential to transform industries, redefine security, and accelerate innovation in ways that traditional systems cannot match.

What Makes Quantum Computing Different

Classical computers operate using bits that represent either a zero or a one. This binary approach has served us well for decades, but it comes with limitations. Quantum computers work differently. They use quantum bits, or qubits, which can exist in a superposition: a weighted combination of zero and one at the same time. A system of n qubits is described by 2^n such weights, which lets a quantum computer represent enormous state spaces compactly. Measurement, however, returns only a single outcome, so quantum algorithms rely on interference to make the useful answers the likely ones.
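A small state-vector simulation shows what superposition means in practice. This is a classical sketch in plain Python with complex numbers, not a quantum SDK: a qubit is represented by two amplitudes, the Hadamard gate turns |0⟩ into an equal superposition, and the squared magnitudes of the amplitudes give the probabilities of measuring 0 or 1.

```python
import math

# A single-qubit state is a pair of complex amplitudes (alpha, beta) for the
# basis states |0> and |1>, with |alpha|^2 + |beta|^2 = 1.
ZERO = (1 + 0j, 0 + 0j)

def hadamard(state):
    """Apply H: |0> -> (|0> + |1>)/sqrt(2), |1> -> (|0> - |1>)/sqrt(2)."""
    alpha, beta = state
    s = 1 / math.sqrt(2)
    return (s * (alpha + beta), s * (alpha - beta))

def probabilities(state):
    """Born rule: each outcome's probability is its amplitude's squared magnitude."""
    return [abs(a) ** 2 for a in state]

superposed = hadamard(ZERO)
print(probabilities(superposed))  # roughly [0.5, 0.5]

# Applying H again makes the amplitudes interfere, returning |0> with certainty
print(probabilities(hadamard(superposed)))  # roughly [1.0, 0.0]
```

The second print is the interesting one: the |1⟩ contributions cancel out, which is the kind of interference quantum algorithms exploit.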

Quantum computers also take advantage of entanglement, a phenomenon in which two or more qubits share a single joint state, so that measurements on them produce correlated results regardless of the distance between them (although this cannot be used to send information faster than light). Together with superposition and interference, entanglement allows certain quantum algorithms to outperform the best known classical methods.
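Entanglement can be illustrated with a small two-qubit state-vector simulation in plain Python: four amplitudes, for |00⟩, |01⟩, |10⟩, and |11⟩. Applying a Hadamard gate to the first qubit and then a CNOT gate produces a Bell state, in which each qubit alone is equally likely to read 0 or 1, yet the two results always agree. Function names are illustrative.

```python
import math

# Two-qubit state: amplitudes for |00>, |01>, |10>, |11> (first qubit is the left bit)
def hadamard_first(state):
    """Apply H to the first qubit, leaving the second untouched."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11)]

def cnot(state):
    """Flip the second qubit when the first is 1: swaps the |10> and |11> amplitudes."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]

start = [1 + 0j, 0j, 0j, 0j]        # both qubits in |0>
bell = cnot(hadamard_first(start))  # (|00> + |11>) / sqrt(2)

for label, amp in zip(["00", "01", "10", "11"], bell):
    print(label, round(abs(amp) ** 2, 3))
```

Outcomes 01 and 10 have zero probability: measuring one qubit tells you what the other will show, even though neither result is predetermined.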

Why It Matters

Quantum computing is not just a faster version of existing systems. It is an entirely new way of solving problems. For certain problems, tasks that would take classical computers thousands of years could in principle be completed far faster on a sufficiently capable quantum machine. For sectors such as pharmaceuticals, finance, and cybersecurity, this capability could be a game-changer.
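How large a quantum speedup is depends heavily on the problem. As one well-studied example, Grover's algorithm searches an unstructured list of N items using roughly (π/4)·√N queries, while a classical search needs about N/2 checks on average. The short calculation below compares the two; it counts idealized queries only and ignores error correction and other real-world overheads.

```python
import math

def classical_queries(n):
    """Average number of checks to find one marked item by linear search."""
    return n / 2

def grover_queries(n):
    """Approximate optimal number of Grover iterations for one marked item."""
    return math.floor(math.pi / 4 * math.sqrt(n))

for n in (10**6, 10**12):
    print(f"N = {n:.0e}: classical ~{classical_queries(n):.3g}, Grover ~{grover_queries(n):.3g}")
```

This quadratic speedup is substantial for large N, but it is far smaller than the dramatic gains believed possible for a few special problems such as factoring.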

Imagine being able to simulate molecular structures at an atomic level, leading to the rapid development of new drugs and materials. Financial institutions could run complex risk models in real time, allowing them to make better investment decisions. In logistics and transportation, quantum optimization could cut costs and improve efficiency on a global scale.

The Cybersecurity Challenge

While the potential benefits are enormous, quantum computing also presents serious risks, particularly in cybersecurity. Much of modern encryption, especially public-key schemes such as RSA and elliptic-curve cryptography, relies on mathematical problems like factoring large numbers that are extremely difficult for classical computers to solve. A sufficiently large, error-corrected quantum computer running Shor’s algorithm could solve these problems efficiently. This means that the encryption standards protecting everything from personal banking to national security could become obsolete.
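Shor's algorithm reduces factoring a number N, the problem underlying RSA, to finding the period r of the function a^x mod N. The sketch below shows only the classical wrapper around that idea: it finds the period by brute force, which is precisely the step that takes exponential time classically and that a large quantum computer could perform efficiently. It handles toy numbers like 15 and 21, not real key sizes.

```python
import math

def find_period(a, n):
    """Smallest r > 0 with a**r % n == 1 (brute force; the quantum step in Shor's algorithm)."""
    value, r = a % n, 1
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def shor_classical(n, a):
    """Try to split n using the period of a mod n. Returns a factor pair or None."""
    g = math.gcd(a, n)
    if g != 1:
        return g, n // g  # lucky guess: a already shares a factor with n
    r = find_period(a, n)
    if r % 2 == 1:
        return None  # odd period: retry with a different a
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None  # trivial square root: retry with a different a
    p = math.gcd(half - 1, n)
    return p, n // p

print(shor_classical(15, 7))  # period of 7 mod 15 is 4
print(shor_classical(21, 2))  # period of 2 mod 21 is 6
```

For 15 and a = 7, the period is 4, so 7² mod 15 = 4, and gcd(4 − 1, 15) = 3 reveals the factors 3 and 5.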

Researchers are already working on quantum-resistant algorithms, but the transition will not be simple. It will require coordination across industries and governments to ensure that the digital world remains secure in the face of this new threat.

Current State and Roadblocks

Despite the excitement, quantum computing is still in its infancy. Building a practical, scalable quantum computer is a massive challenge. Qubits are extremely sensitive to environmental noise, which can cause errors in calculations. To counter this, many systems, particularly those built from superconducting circuits, must operate at temperatures near absolute zero, and large-scale machines will require complex error correction techniques.

Leading companies and research institutions are investing heavily to overcome these barriers. Tech giants like IBM, Google, and Microsoft are racing to develop quantum platforms that can be accessed through the cloud. Startups are also entering the field with specialized approaches, from trapped ion systems to superconducting circuits.

Looking Ahead

The future of quantum computing will not be about replacing classical systems but complementing them. For everyday tasks like running applications or browsing the internet, traditional computers remain efficient and cost-effective. Quantum machines will take on the specialized problems that classical architectures struggle with, creating a hybrid ecosystem where both technologies coexist.

As progress continues, the impact of quantum computing will ripple across every industry. It will influence everything from medicine and energy to artificial intelligence and space exploration. The organizations that understand and prepare for this shift will lead the next wave of digital transformation.

At BitPulse, we see quantum technology not as a distant dream but as an approaching reality. The question is no longer if it will happen, but how quickly we can adapt to the possibilities and challenges it brings.

The Hidden Backbone of Connectivity

Inside the World of Fiber Optic Networks

Every digital interaction we have today feels instantaneous. We send messages, stream videos, attend virtual meetings, and rarely think about the infrastructure that makes this possible. Beneath this seamless experience lies an intricate system of fiber optic networks, the silent backbone of global connectivity. Without it, the internet as we know it would not exist. Understanding how fiber optics work reveals the incredible engineering that powers our digital world.

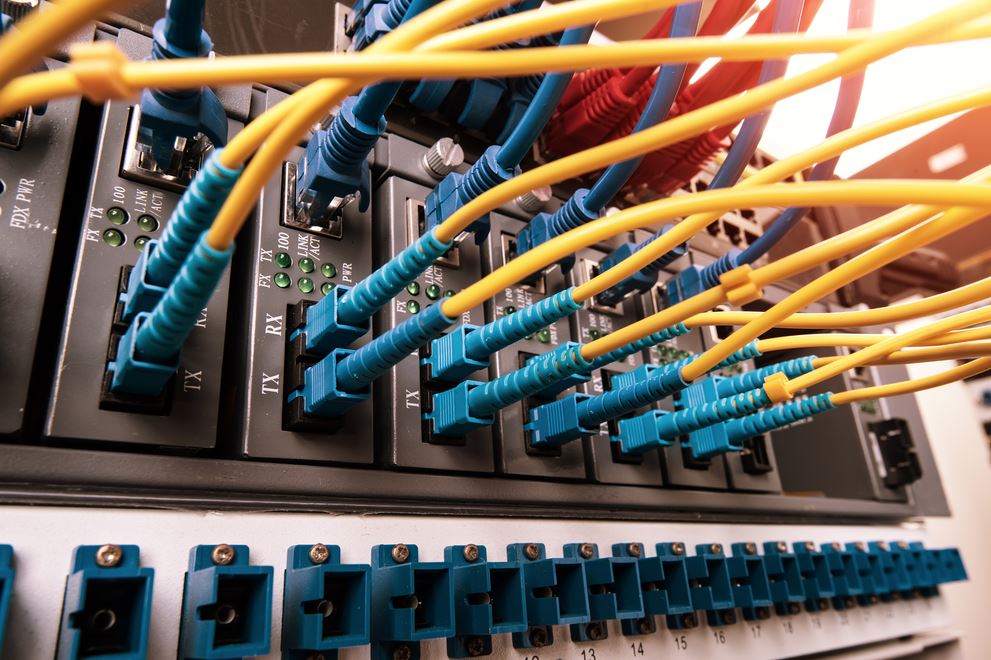

What Makes Fiber Optics So Important

Fiber optic cables transmit data using pulses of light instead of electrical signals. Rather than copper wires, they use strands of glass or plastic, and light travels through them at roughly two-thirds of its speed in a vacuum. Combined with very low signal loss and enormous bandwidth, this lets fiber carry huge amounts of data over long distances with minimal latency.
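Light in glass is fast but not instantaneous: it slows by a factor equal to the fiber's refractive index n, about 1.47 for typical silica fiber (an assumed round figure). The quick calculation below gives the one-way propagation delay for a hypothetical 6,000 km transoceanic route, ignoring equipment and routing delays.

```python
C_VACUUM_KM_S = 299_792.458  # speed of light in vacuum, km/s

def one_way_delay_ms(distance_km, refractive_index=1.47):
    """Propagation delay through fiber; light travels at c / n inside the glass."""
    speed_in_fiber = C_VACUUM_KM_S / refractive_index
    return distance_km / speed_in_fiber * 1000

print(f"{one_way_delay_ms(6000):.1f} ms one way in fiber")
print(f"{one_way_delay_ms(6000, 1.0):.1f} ms one way in a vacuum, for comparison")
```

Roughly 29 ms versus 20 ms: the physics of glass, not the equipment, sets a floor on long-haul latency.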

The demand for fiber optics has grown exponentially because of the sheer volume of data generated daily. Cloud computing, streaming services, smart devices, and 5G networks all rely heavily on high-capacity connections. Copper cables simply cannot keep up with these requirements, and that is why fiber optics have become essential.

The Science Behind the Speed

At the heart of fiber optics is the principle of total internal reflection. Light travels through the fiber’s core, which is surrounded by a cladding layer with a slightly lower refractive index. Light striking the boundary at a glancing angle is reflected back into the core instead of escaping. Because the glass itself absorbs and scatters very little light, the signal can travel long distances with little loss of quality. Fibers are typically bundled together and encased in protective layers so the cable can withstand environmental stress.
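Total internal reflection occurs only when light meets the core-cladding boundary beyond the critical angle, θc = arcsin(n_cladding / n_core), measured from the normal to the boundary. The sketch below computes that angle and the related numerical aperture, NA = √(n_core² − n_cladding²), which sets the range of launch angles the fiber accepts. The indices 1.48 and 1.46 are assumed illustrative values, not the specification of any particular cable.

```python
import math

def critical_angle_deg(n_core, n_cladding):
    """Minimum angle of incidence (from the normal) for total internal reflection."""
    if n_cladding >= n_core:
        raise ValueError("core index must exceed cladding index")
    return math.degrees(math.asin(n_cladding / n_core))

def numerical_aperture(n_core, n_cladding):
    """Sine of the maximum acceptance half-angle when launching from air."""
    return math.sqrt(n_core**2 - n_cladding**2)

n_core, n_cladding = 1.48, 1.46  # assumed example values
print(f"critical angle: {critical_angle_deg(n_core, n_cladding):.1f} degrees")
print(f"numerical aperture: {numerical_aperture(n_core, n_cladding):.3f}")
```

A critical angle near 80 degrees means only rays travelling almost parallel to the fiber axis stay trapped, which is why the small index difference between core and cladding matters.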

Modern fiber networks also employ techniques like wavelength division multiplexing. This technology allows multiple signals to travel simultaneously through a single fiber by assigning different wavelengths of light to each data stream. The result is a dramatic increase in bandwidth without requiring additional physical cables.
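Dense WDM systems commonly place channels on the frequency grid defined in ITU-T Recommendation G.694.1, anchored at 193.1 THz. The sketch below lists a few channels at an assumed 100 GHz spacing, converts each frequency to its wavelength (λ = c / f), and multiplies a hypothetical channel count by a hypothetical per-channel rate to show how aggregate capacity grows without adding fiber.

```python
C_M_S = 299_792_458  # speed of light in vacuum, m/s
ANCHOR_THZ = 193.1   # ITU-T G.694.1 grid anchor frequency

def channel_grid(count, spacing_ghz=100):
    """Return (frequency_THz, wavelength_nm) for `count` channels starting at the anchor."""
    channels = []
    for i in range(count):
        f_thz = ANCHOR_THZ + i * spacing_ghz / 1000
        wavelength_nm = C_M_S / (f_thz * 1e12) * 1e9
        channels.append((round(f_thz, 3), round(wavelength_nm, 2)))
    return channels

for f, wl in channel_grid(4):
    print(f"{f} THz -> {wl} nm")

# Hypothetical system: 80 channels, each carrying 100 Gb/s
channel_count, rate_gbps = 80, 100
print(f"aggregate capacity: {channel_count * rate_gbps / 1000} Tb/s on one fiber")
```

The channels sit less than a nanometer apart, which is why WDM depends on precise lasers and filters to keep each data stream separate.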

Global Impact and Infrastructure

Fiber optic cables span continents, oceans, and cities. Submarine cables laid on the ocean floor connect entire regions, enabling real-time communication across the globe. These systems are monumental engineering achievements, often involving thousands of miles of cable and advanced repeaters that keep signals strong throughout the journey.

On land, fiber networks form the foundation of metropolitan connectivity. Internet service providers, businesses, and data centers depend on them to deliver reliable, high-speed internet. As more organizations move to cloud-based systems and remote work becomes standard, fiber infrastructure will only become more critical.

The Role in Emerging Technologies

The future of technology depends on ultra-fast, low-latency connections, and fiber optics provide the foundation for that vision. Technologies like 5G, autonomous vehicles, and virtual reality require immense amounts of data to move quickly and consistently. Wireless systems may deliver the last mile, but they still need fiber optics to support the core network. Without it, these innovations cannot scale effectively.

Artificial intelligence and machine learning systems also benefit from fiber networks. Training advanced models requires transferring massive datasets between distributed computing environments. Fiber optics make this process efficient, reducing the time and cost associated with high-performance computing.

Challenges and Next Steps

Despite its advantages, building and maintaining fiber infrastructure is expensive and time-consuming. Laying new cables involves navigating physical obstacles, regulatory hurdles, and environmental considerations. Rural and underserved areas often struggle to access fiber networks because the cost of deployment outweighs the short-term return on investment.

To address this gap, hybrid solutions are emerging. Technologies like fixed wireless access and satellite broadband complement fiber networks by extending connectivity to regions where laying cables is impractical. However, fiber remains the gold standard for speed, stability, and future scalability.

A Future Built on Light

Fiber optics represent more than just a faster way to browse the internet. They are the nervous system of our digital society, carrying the signals that power communication, commerce, entertainment, and innovation. As demand for connectivity grows, these networks will continue to evolve, becoming denser, faster, and more intelligent.

At BitPulse, we believe that understanding the hidden systems shaping our lives is essential. Fiber optics may not capture headlines every day, but without them, the global economy and the digital experiences we take for granted would collapse. They are not just wires. They are the invisible threads holding our connected world together.

The Silent Revolution of Edge Computing

Technology has a way of moving in cycles. For decades, the industry has shifted between centralization and decentralization, always searching for the most efficient way to process information. Today, we are witnessing one of the most important shifts in recent memory: the rise of edge computing. It is a silent revolution taking place at the intersection of connectivity, data, and performance, redefining how businesses and individuals interact with the digital world.

What Is Edge Computing?

At its core, edge computing refers to processing data closer to its source rather than sending it across vast networks to centralized data centers. Traditional cloud computing models rely on large, remote servers to handle complex workloads. While the cloud remains essential, it introduces latency, bandwidth costs, and potential bottlenecks when billions of connected devices are generating data simultaneously.

Edge computing solves this by moving computation to the "edge" of the network, often inside the device itself or at a local gateway. Think of it as shortening the distance between information and intelligence. By processing data locally, systems respond faster, reduce dependence on large-scale infrastructure, and improve reliability in scenarios where real-time decision-making is critical.
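A common edge pattern is to summarize raw readings locally and forward only compact results to the cloud. The sketch below assumes a hypothetical gateway receiving one temperature reading per second; instead of uploading every value, it sends one summary record (count, mean, min, max) per window. The function name and window size are illustrative.

```python
from statistics import mean

def summarize_windows(readings, window_size):
    """Collapse raw sensor readings into one summary record per window."""
    summaries = []
    for start in range(0, len(readings), window_size):
        window = readings[start:start + window_size]
        summaries.append({
            "count": len(window),
            "mean": round(mean(window), 2),
            "min": min(window),
            "max": max(window),
        })
    return summaries

# Hypothetical: two minutes of per-second temperature readings
raw = [21.0 + (i % 7) * 0.1 for i in range(120)]
summaries = summarize_windows(raw, window_size=60)

print(f"raw records: {len(raw)}, records sent upstream: {len(summaries)}")
print(summaries[0])
```

Here 120 readings become 2 records, a 60-fold reduction in upstream traffic, while the cloud still receives enough information for long-term analytics.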

Why It Matters Today

The explosion of the Internet of Things is the single most significant driver of edge computing adoption. Smart factories, autonomous vehicles, wearable health devices, and connected homes all produce staggering amounts of data. Sending all this information to distant data centers would not only be costly but also impractical. Many of these applications require decisions in milliseconds. A self-driving car cannot afford the delay of waiting for a cloud server to tell it when to brake.
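A quick calculation shows why milliseconds matter. The sketch below computes how far a vehicle travels while waiting for a decision; the 100 km/h speed and the 100 ms versus 10 ms response times are assumed illustrative figures, not measurements of any real network.

```python
def distance_during_delay_m(speed_kmh, delay_ms):
    """Distance covered (in meters) before a decision arrives."""
    speed_m_per_s = speed_kmh * 1000 / 3600
    return speed_m_per_s * delay_ms / 1000

for label, delay in [("remote cloud round trip", 100), ("local edge decision", 10)]:
    print(f"{label}: {distance_during_delay_m(100, delay):.2f} m traveled at 100 km/h")
```

Under these assumptions, the car covers about 2.8 m waiting for a distant server versus about 0.3 m with a local decision.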

Edge computing delivers the speed and efficiency required by these scenarios. It minimizes latency and ensures that systems continue functioning even when internet connectivity is unreliable or unavailable. This is more than an incremental improvement. For many industries, it is the difference between innovation and stagnation.

Industries Leading the Charge

Several sectors have embraced edge computing as a core element of their digital transformation strategies. In manufacturing, real-time monitoring of machines reduces downtime and improves operational efficiency. Sensors on assembly lines analyze data instantly, detecting anomalies before they lead to costly failures.
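A simple way for a sensor or gateway to flag anomalies locally is to compare each new reading with recent history and alert when it lies several standard deviations away. The sketch below is a minimal rolling z-score detector; the window length, the threshold of 3, and the vibration values are assumptions chosen for illustration, and production systems often use more robust methods.

```python
from collections import deque
from statistics import mean, stdev

def detect_anomalies(readings, window=10, threshold=3.0):
    """Yield (index, value) for readings far outside the recent rolling window."""
    history = deque(maxlen=window)
    for i, value in enumerate(readings):
        if len(history) == window:
            mu, sigma = mean(history), stdev(history)
            # Skip flat histories (sigma == 0) to avoid dividing by zero
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                yield i, value
        history.append(value)

# Hypothetical vibration readings from a motor; index 15 is a sudden spike
vibration = [1.00, 1.02, 0.98, 1.01, 0.99, 1.03, 0.97, 1.00, 1.02, 0.98,
             1.01, 0.99, 1.00, 1.02, 0.98, 2.50, 1.01, 0.99]

print(list(detect_anomalies(vibration)))
```

Because the check runs on the device, an alert can be raised immediately, without a round trip to a data center.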

Healthcare is another area where edge solutions can help save lives. Portable diagnostic tools and remote monitoring devices can process patient data locally, providing immediate alerts to medical staff. This is especially critical in rural or underserved regions where access to centralized healthcare infrastructure is limited.

Even entertainment is feeling the impact. Online gaming and virtual reality demand ultra-low latency to create immersive experiences. Edge servers positioned near players dramatically improve response times, enhancing user satisfaction and reducing frustration.

The Challenges Ahead

Despite its promise, edge computing comes with unique challenges. Security is one of the most pressing concerns. Distributing computing power across countless devices creates more entry points for cyberattacks. Protecting data at the edge requires new approaches to encryption, authentication, and monitoring.
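New approaches to edge security still tend to rest on well-established building blocks. As one hedged example, the sketch below uses Python's standard `hmac` module so a gateway can check that a message came from a device holding a shared secret key and was not altered in transit. The key, device ID, and message format are hypothetical; a real deployment would also need secure key provisioning, replay protection, and encryption for confidentiality.

```python
import hashlib
import hmac
import json

def sign(message: dict, key: bytes) -> str:
    """Compute an HMAC-SHA256 tag over a canonical JSON encoding of the message."""
    payload = json.dumps(message, sort_keys=True).encode()
    return hmac.new(key, payload, hashlib.sha256).hexdigest()

def verify(message: dict, tag: str, key: bytes) -> bool:
    """Recompute the tag and compare in constant time."""
    return hmac.compare_digest(sign(message, key), tag)

device_key = b"hypothetical-shared-secret"  # in practice, provisioned securely per device
reading = {"device_id": "sensor-42", "temp_c": 21.5, "seq": 1001}

tag = sign(reading, device_key)
print(verify(reading, tag, device_key))   # untouched message

tampered = dict(reading, temp_c=99.9)
print(verify(tampered, tag, device_key))  # altered in transit
```

Sorting keys before encoding ensures the sender and receiver hash identical bytes, and `compare_digest` avoids leaking timing information during the check.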

Standardization is another obstacle. With so many different hardware platforms and software environments, interoperability remains a complex problem. Without clear standards, deploying edge solutions at scale can be slow and costly.

Finally, there is the matter of cost. While edge computing reduces dependency on centralized infrastructure, it often requires significant investment in new hardware and specialized software. Organizations must weigh these costs against the long-term benefits of faster, more efficient systems.

The Future of Computing Is Closer Than Ever

Edge computing is not a replacement for the cloud. Instead, it complements it. The future will be a hybrid model where cloud and edge work together, each serving roles that play to their strengths. The cloud will remain vital for large-scale data storage and analytics, while the edge will dominate in real-time, latency-sensitive environments.

For businesses, adopting edge strategies today means staying competitive tomorrow. For individuals, it means enjoying faster, smarter, and more reliable technologies in everyday life. This silent revolution may not make headlines every day, but its influence will shape the next decade of digital innovation.

At BitPulse, we believe the best way to understand the future is to watch where technology is moving quietly. Edge computing is one of those moves, and its ripple effects are already reaching every corner of the connected world.

The Invisible Engines of Progress

How Microprocessors Drive Our World

There is something fascinating about how the smallest elements can shape the largest changes. Microprocessors are a perfect example. These tiny pieces of silicon, often no larger than a coin, have transformed the way we live, work, and think. They are the invisible engines running quietly behind the scenes, powering everything from your smartphone to the most advanced artificial intelligence systems.

The Heart of Digital Intelligence

A microprocessor is more than a collection of transistors and circuits. It is the brain of modern computing. Every second, it executes billions of instructions, enabling software to translate human intention into digital action. Whether you are sending a message, streaming a video, or training a machine learning model, a microprocessor is orchestrating the process with precise timing and efficiency.
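A back-of-the-envelope estimate shows where "billions of instructions" comes from: peak throughput is roughly cores × clock frequency × instructions completed per cycle (IPC). The figures below, 8 cores at 4 GHz with an IPC of 4, are assumed round numbers rather than the specifications of any real chip, and real workloads rarely sustain peak IPC.

```python
def peak_instructions_per_second(cores, clock_hz, ipc):
    """Idealized upper bound: every core retires `ipc` instructions every cycle."""
    return cores * clock_hz * ipc

# Hypothetical desktop-class processor
modern = peak_instructions_per_second(cores=8, clock_hz=4e9, ipc=4)
print(f"{modern:.3g} instructions per second (peak)")
```

Each core handles only a handful of instructions per clock cycle; the billions come from billions of cycles per second multiplied across cores.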

What makes them so powerful is not just speed but versatility. Early processors were often designed with a single product in mind; the Intel 4004, for example, was developed for a calculator. Today, they are general-purpose units capable of handling complex workloads across multiple domains. From consumer devices to industrial machines, they deliver performance that seems almost limitless, yet their size keeps shrinking.

A Journey Through Innovation

The story of microprocessors is a tale of relentless progress. When Intel introduced the 4004 in 1971, it carried a modest 2,300 transistors and ran at 740 kHz. It was groundbreaking for its time, but if you compare it to modern processors with billions of transistors and clock speeds beyond 5 GHz, the scale of advancement is staggering.

This acceleration follows Moore’s Law, Gordon Moore’s observation that the number of transistors on a chip tends to double roughly every two years. While the pace has slowed in recent years, the industry has found creative ways to maintain progress. Smaller fabrication nodes, 3D architectures, and advanced lithography have allowed microprocessors to keep evolving without hitting a complete wall.
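Moore's Law is easy to express as a formula: starting from N₀ transistors in year t₀ and doubling every two years, the projected count in year t is N₀ · 2^((t − t₀)/2). The sketch below applies that idealized formula to the 4004's 2,300 transistors from 1971. The projection is illustrative only; real chips have tracked it approximately, not exactly.

```python
def moores_law_projection(start_count, start_year, year, doubling_years=2):
    """Idealized transistor count assuming a doubling every `doubling_years` years."""
    return start_count * 2 ** ((year - start_year) / doubling_years)

for year in (1971, 1991, 2021):
    print(year, f"{moores_law_projection(2300, 1971, year):,.0f}")
```

Fifty years is 25 doublings, a growth factor of about 33.5 million, which takes 2,300 transistors to roughly 77 billion.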

The Role in Everyday Life

It is easy to overlook microprocessors because they are hidden inside the things we use daily. Your car relies on dozens of them to manage everything from engine control to infotainment systems. Smart appliances in homes operate through embedded processors that ensure energy efficiency and connectivity. Even medical devices, whose electronics patients rarely see, depend on these tiny circuits to deliver life-saving precision.

The significance goes beyond convenience. Microprocessors have enabled automation in manufacturing, breakthroughs in scientific research, and the rapid growth of the internet economy. They are at the heart of innovation across every sector.

Challenges and the Future Ahead

Despite the incredible progress, the road ahead presents challenges. As chips become smaller, managing heat and energy efficiency becomes more difficult. Security is another major concern, as vulnerabilities at the hardware level can compromise entire systems.

The industry is responding with solutions like heterogeneous computing, where CPUs work alongside GPUs and specialized accelerators for tasks like AI processing. There is also growing interest in quantum computing, which could redefine what processors are capable of achieving.

Why It Matters

Understanding microprocessors is not just for engineers or tech enthusiasts. It is about recognizing the foundation of the digital world we all depend on. Every app, every smart device, every cloud service runs on this silent architecture. Without it, the digital era would collapse.

BitPulse was created to bring clarity to the technology that shapes our lives. In a world where complexity often overwhelms, our goal is simple: to explain the unseen forces that matter most. Microprocessors are one of those forces, and their story is far from over.